Description

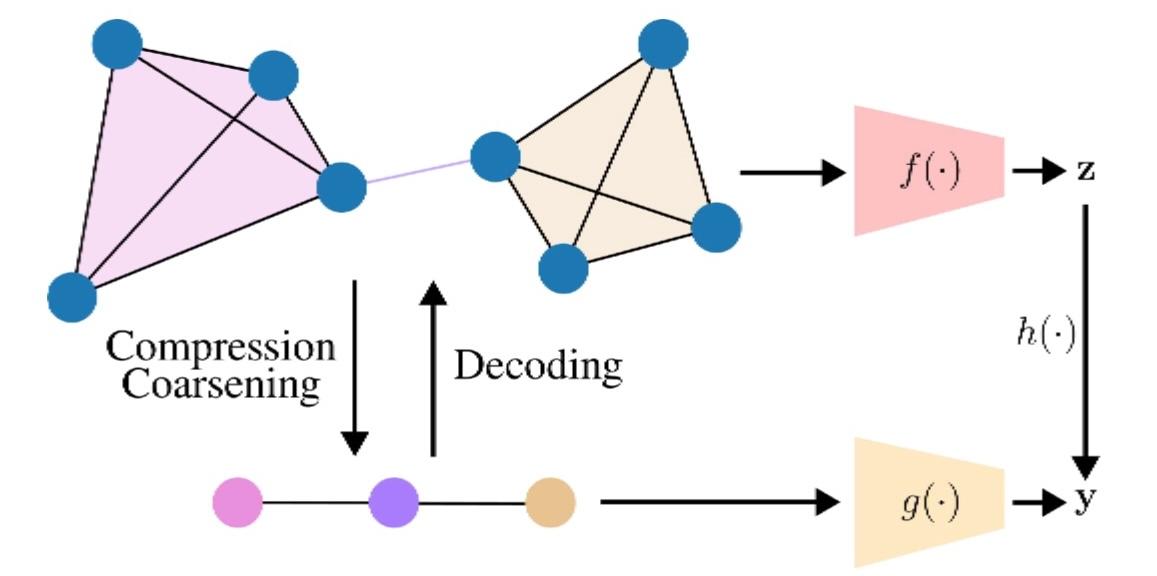

Cardiovascular diseases remain the leading cause of mortality worldwide (WHO, 2019). In particular, coronary artery disease often requires coronary artery bypass grafting, a highly technical procedure that demands more than 15 years of training and relies on wet labs, simulators and direct observation in the operating room. These traditional training methods suffer from limited availability, reproducibility and interactivity. This project proposes a technological and methodological break from these methods, based on the cross-fertilization of immersive technologies (enabling safe, repeatable and personalized training experiences [1]) and generative technologies (creating artificial, personalized visual content [2], [3], [4]) for cardiac surgery. The objective is to design a generative digital twin of cardiac surgery, capable of reconstructing [4], simulating [5], [6], and enriching real surgical interventions for immersive and adaptive training [7], [8]. To that end, the core ambition is to build a controllable virtual environment generated from real multimodal data (video, depth, motion, and sound) combined with text-to-3D generative AI [2], [9], [10]. The system will enable trainees to visualize, interact with, and rehearse surgical gestures in extended reality, while dynamically adapting to user expertise and scenario variations.

Introducing generative modeling to transcend the limitations of current handcrafted simulators and scripted experiences involves several challenges. First, the use of Extended Reality (XR) in cardiac surgery remains restricted to serious games or teleportation [1], owing to the lack of publicly available open-surgery datasets [8], [11]. Second, computer vision and generative AI methods for reconstructing and analyzing dynamic 3D scenes are limited by constraints such as deformable tissues, occlusions, and lighting variability [6], [12], [13]. To address these challenges, the project will build upon recent advances in neural scene representations [9] and differentiable point-based rendering [13], combining implicit and explicit modeling approaches [3] with text-to-3D generative diffusion to produce semantically controllable and anatomically faithful environments [2], [10], [14], [15]. In the medium term, this should make it possible to automatically generate realistic and customizable scenarios from text and image prompts while accounting for the constraints above [6].

The project involves several partners specialized in (i) computer vision and VR/AR (ARMEDIA, SAMOVAR, Télécom SudParis); (ii) models for generative AI (SOP, SAMOVAR, Télécom SudParis); and (iii) cardiac surgery with expertise in XR (Prof. Patrick Nataf, INSERM). As a result of this collaboration, a surgeon's-view database has already been partially acquired. The PhD student will extend and optimize the capture setup; develop pre-processing and annotation pipelines (segmentation, summarization, description, medical ontology); fine-tune and implement generative models for reconstruction and scene synthesis; and develop a multimodal, prompt-based 3D generation engine for surgical scenarios. The expected outcome is a proof-of-concept AI-driven cardiac training simulator integrated into existing XR infrastructures at INSERM. The teams have been approached by P. Spinga, a former student of Télécom SudParis, ranked 16/195 (1st year) and 8/241 (2nd year), and a former research engineer in the Healthcare department at Dassault Systèmes.

In conclusion, this research aims to pave the way toward a complete digital twin of cardiac surgery: a cognitive and perceptual companion that could one day assist surgeons before, during, and after interventions, transforming both medical education and the future of surgery itself [7].

Bibliography

[1] M. Queisner, C. Remde, and M. Pogorzhelskiy, "VolumetricOR: A New Approach to Simulate Surgical Interventions in Virtual Reality for Surgical Education", 18 October 2019, Humboldt-Universität zu Berlin. doi: 10.18452/20470.

[2] T. Yi et al., "GaussianDreamer: Fast Generation from Text to 3D Gaussians by Bridging 2D and 3D Diffusion Models", 13 May 2024, arXiv:2310.08529. doi: 10.48550/arXiv.2310.08529.

[3] P. Pan et al., "Diff4Splat: Controllable 4D Scene Generation with Latent Dynamic Reconstruction Models", 1 November 2025, arXiv:2511.00503. doi: 10.48550/arXiv.2511.00503.

[4] B. G. A. Gerats, J. M. Wolterink, and I. A. M. J. Broeders, "NeRF-OR: neural radiance fields for operating room scene reconstruction from sparse-view RGB-D videos", Int J CARS, vol. 20, no. 1, pp. 147‑156, Sept. 2024, doi: 10.1007/s11548-024-03261-5.

[5] Z. Yang, K. Chen, Y. Long, and Q. Dou, "SimEndoGS: Efficient Data-driven Scene Simulation using Robotic Surgery Videos via Physics-embedded 3D Gaussians", 6 August 2024, arXiv:2405.00956. doi: 10.48550/arXiv.2405.00956.

[6] Y. Wang, B. Gong, Y. Long, S. H. Fan, and Q. Dou, "Efficient EndoNeRF Reconstruction and Its Application for Data-driven Surgical Simulation", 10 April 2024, arXiv:2404.15339. doi: 10.48550/arXiv.2404.15339.

[7] Z. Rudnicka, K. Proniewska, M. Perkins, and A. Pregowska, "Health Digital Twins Supported by Artificial Intelligence-based Algorithms and Extended Reality in Cardiology", Electronics, vol. 13, no. 5, p. 866, Feb. 2024, doi: 10.3390/electronics13050866.

[8] Y. Frisch et al., "SurGrID: Controllable Surgical Simulation via Scene Graph to Image Diffusion", Int J CARS, vol. 20, no. 7, pp. 1421‑1429, May 2025, doi: 10.1007/s11548-025-03397-y.

[9] J. Zhang, X. Li, Z. Wan, C. Wang, and J. Liao, "Text2NeRF: Text-Driven 3D Scene Generation with Neural Radiance Fields", 31 January 2024, arXiv:2305.11588. doi: 10.48550/arXiv.2305.11588.

[10] J. Shriram, A. Trevithick, L. Liu, and R. Ramamoorthi, "RealmDreamer: Text-Driven 3D Scene Generation with Inpainting and Depth Diffusion", 11 March 2025, arXiv:2404.07199. doi: 10.48550/arXiv.2404.07199.

[11] T. Zeng, G. L. Galindo, J. Hu, P. Valdastri, and D. Jones, "Realistic Surgical Image Dataset Generation Based On 3D Gaussian Splatting", 20 July 2024, arXiv:2407.14846. doi: 10.48550/arXiv.2407.14846.

[12] A. Lou, Y. Li, X. Yao, Y. Zhang, and J. Noble, "SAMSNeRF: Segment Anything Model (SAM) Guides Dynamic Surgical Scene Reconstruction by Neural Radiance Field (NeRF)", 6 February 2024, arXiv:2308.11774. doi: 10.48550/arXiv.2308.11774.

[13] A. Guédon and V. Lepetit, "SuGaR: Surface-Aligned Gaussian Splatting for Efficient 3D Mesh Reconstruction and High-Quality Mesh Rendering", 2 December 2023, arXiv:2311.12775. doi: 10.48550/arXiv.2311.12775.

[14] Y. Huang et al., "SurgTPGS: Semantic 3D Surgical Scene Understanding with Text Promptable Gaussian Splatting", 1 July 2025, arXiv:2506.23309. doi: 10.48550/arXiv.2506.23309.

[15] U. Singer et al., "Text-To-4D Dynamic Scene Generation